By: Christian Groll

Re-posted from: http://grollchristian.wordpress.com/2014/09/05/sp500-data-download-julia/

In a previous post I already described how to download stock price data for all constituents of the SP500 with R. Meanwhile, however, I have shifted all of my coding to the new and very promising technical computing language Julia, so I naturally wanted to enjoy the convenience of downloading stock price data directly in Julia as well. Hence, in this post I will describe how to use Julia to download stock price data from Yahoo!Finance in general and, as an advanced use case, how to download adjusted closing prices for all SP500 constituents. We will also make use of Julia’s parallel computing capabilities in order to tremendously speed up the downloading and processing steps compared to my original code in R.

Before we start, we first need to make sure that we have all relevant packages installed. As a format for storing time series data I always rely on the TimeData package from the official package repository:

Pkg.add("TimeData")

The functions required for data download are bundled in the not yet registered EconDatasets package, which we can clone from GitHub:

Pkg.clone("https://github.com/JuliaFinMetriX/EconDatasets.jl.git")

1 Download data using readYahooFinance

Now that all required packages are installed, we will first demonstrate a simple use case for the low-level function readYahooFinance. This function gives easy access to the complete stock data offered by Yahoo!Finance, comprising the fields open, high, low, close, volume and adjusted close. As an example, we now download data for the NASDAQ-100, S&P 500 and EURO STOXX 50 indices.

using TimeData
using Dates
using EconDatasets

tickerSymbs = ["^NDX"
               "^GSPC"
               "^STOXX50E"]

dates = Date(1960,1,1):Date(2014,7,20)

indexData = [readYahooFinance(dates, symb) for symb in tickerSymbs]

## display first five dates of NASDAQ
indexData[1][1:5, :]

| idx | Open | High | Low | Close | Volume | Adj_Close |
|---|---|---|---|---|---|---|
| 1985-10-01 | 221.24 | 224.32 | 221.13 | 224.28 | 153160000 | 112.14 |
| 1985-10-02 | 224.28 | 225.08 | 221.56 | 221.65 | 164640000 | 110.82 |
| 1985-10-03 | 221.68 | 222.37 | 220.24 | 221.74 | 147300000 | 110.87 |
| 1985-10-04 | 221.74 | 221.74 | 219.71 | 220.15 | 147900000 | 110.07 |
| 1985-10-07 | 220.15 | 220.27 | 216.35 | 216.4 | 128640000 | 108.2 |

Using a comprehension, the function readYahooFinance can be applied to all ticker symbols in an Array. The output is an Array of type Any, with individual entries of type Timematr.

When we focus on only one variable for each stock, we can store the data more concisely in a single TimeData object. To this end, we join the individual stocks on their idx entries. Since we do not want to lose any data at this step, we use an outer join, which yields a row for each date that occurs for at least one of the individual stocks. Missing values are filled with NA, so we now get an object of type Timenum, as Timematr objects are not allowed to contain NAs.

adjCloseData = indexData[1][:Adj_Close]
names!(adjCloseData.vals, [symbol(tickerSymbs[1])])

## join the remaining series one at a time
for ii=2:length(tickerSymbs)
    nextStock = indexData[ii] |>
        x -> x[:Adj_Close]
    names!(nextStock.vals, [symbol(tickerSymbs[ii])])
    adjCloseData = joinSortedIdx_outer(adjCloseData, nextStock)
end

adjCloseData[[1:10; (end-10):end], :]

| idx | ^NDX | ^GSPC | ^STOXX50E |
|---|---|---|---|
| 1950-01-03 | NA | 16.66 | NA |
| 1950-01-04 | NA | 16.85 | NA |
| 1950-01-05 | NA | 16.93 | NA |
| 1950-01-06 | NA | 16.98 | NA |
| 1950-01-09 | NA | 17.08 | NA |
| 1950-01-10 | NA | 17.03 | NA |
| 1950-01-11 | NA | 17.09 | NA |
| 1950-01-12 | NA | 16.76 | NA |
| 1950-01-13 | NA | 16.67 | NA |
| 1950-01-16 | NA | 16.72 | NA |
| 2014-07-04 | NA | NA | 3270.47 |
| 2014-07-07 | 3910.71 | 1977.65 | 3230.92 |
| 2014-07-08 | 3864.07 | 1963.71 | 3184.38 |
| 2014-07-09 | 3892.91 | 1972.83 | 3203.1 |
| 2014-07-10 | 3880.04 | 1964.68 | 3150.59 |
| 2014-07-11 | 3904.58 | 1967.57 | 3157.05 |
| 2014-07-14 | 3929.46 | 1977.1 | 3185.86 |
| 2014-07-15 | 3914.46 | 1973.28 | 3153.75 |
| 2014-07-16 | 3932.33 | 1981.57 | 3202.94 |
| 2014-07-17 | 3878.01 | 1958.12 | 3157.82 |
| 2014-07-18 | 3939.89 | 1978.22 | 3164.21 |
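To make the outer join step concrete, here is a minimal sketch in current Julia (1.x) that uses only the Dates standard library. It is not the TimeData implementation of joinSortedIdx_outer: the function name outerjoin_sorted and the toy dates and values are hypothetical. It merges two date-sorted series in a single pass and fills dates that exist in only one series with `missing`, the Julia 1.x counterpart of NA, just like the 2014-07-04 row above, where only ^STOXX50E has a value.

```julia
using Dates

# Hypothetical helper (not part of TimeData): outer join of two series whose
# date indices are sorted ascending. Every date from either input gets one
# output row; a side without an observation on that date gets `missing`.
function outerjoin_sorted(ia::Vector{Date}, va::Vector{Float64},
                          ib::Vector{Date}, vb::Vector{Float64})
    idx = Date[]
    left = Union{Missing,Float64}[]
    right = Union{Missing,Float64}[]
    i, j = 1, 1
    while i <= length(ia) || j <= length(ib)
        if j > length(ib) || (i <= length(ia) && ia[i] < ib[j])
            # date only in the first series
            push!(idx, ia[i]); push!(left, va[i]); push!(right, missing)
            i += 1
        elseif i > length(ia) || ib[j] < ia[i]
            # date only in the second series
            push!(idx, ib[j]); push!(left, missing); push!(right, vb[j])
            j += 1
        else
            # date present in both series
            push!(idx, ia[i]); push!(left, va[i]); push!(right, vb[j])
            i += 1; j += 1
        end
    end
    return idx, left, right
end

# Toy example: the second series has no value on 2014-07-04
ia = [Date(2014, 7, 3), Date(2014, 7, 4), Date(2014, 7, 7)]
ib = [Date(2014, 7, 3), Date(2014, 7, 7)]
idx, left, right = outerjoin_sorted(ia, [1.0, 2.0, 3.0], ib, [10.0, 30.0])
```

Because both indices are sorted, the join needs only one pass over each input rather than a lookup per date.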

2 Download adjusted closing prices of SP500 constituents

Now that we have seen a first use case of the function readYahooFinance, we want to test the capabilities of Julia and the EconDatasets package on a more challenging task: downloading adjusted stock prices for all constituents of the SP500 in a fully automated way. To do so, we first need a list of the ticker symbols of all constituents, which we can get from the S&P homepage. However, this list is stored as an Excel sheet with .xls extension, so we need to read in this binary file with the package Taro.

For Taro to work, it must be able to find Java on your system. If your Java path deviates from the default settings, set the JAVA_LIB environment variable accordingly in your .bashrc file. In my case, the variable is set as follows:

# 64-bit machine
# JAVA_LIB="/usr/lib/jvm/java-7-openjdk-amd64/jre/lib/amd64/server/"
# 32-bit machine
JAVA_LIB="/usr/lib/jvm/java-7-openjdk-i386/jre/lib/i386/server/"
export JAVA_LIB

We can now install and load package Taro:

Pkg.add("Taro")

using Taro
Taro.init()

Found libjvm @ /usr/lib/jvm/java-7-openjdk-i386/jre/lib/i386/server/

If your Taro configuration is not correct, you will get an error at this step. In this case, you can simply download the Excel sheet manually, export it to .csv, and read it in with the function readtable from the DataFrames package.

Otherwise, you can download the file and use Taro to read in the relevant part of the Excel sheet:

url = "http://us.spindices.com/idsexport/file.xls?hostIdentifier=48190c8c-42c4-46af-8d1a-0cd5db894797&selectedModule=Constituents&selectedSubModule=ConstituentsFullList&indexId=340"
filepath = download(url)
constituents = Taro.readxl(filepath, "Constituents", "A10:B511")
head(constituents)

| Constituent | Symbol |
|---|---|
| 3M Co | MMM |
| Abbott Laboratories | ABT |
| AbbVie Inc. | ABBV |
| Accenture plc | ACN |
| ACE Limited | ACE |
| Actavis plc | ACT |

We should now have the name and ticker symbol of each SP500 constituent stored in a DataFrame. In my case, however, there is even one ticker symbol too many, although I do not know why:

(nTicker, nVars) = size(constituents)

(501,2)

This is an inconsistency that I will not investigate further at this point. In addition, some of the ticker symbols are automatically read in as boolean values, so we have to convert them to strings first. Let’s display all constituents with boolean values:

isBoolTicker = [isa(tickerSymbol, Bool) for tickerSymbol in
                constituents[:Symbol]]
constituents[find(isBoolTicker), :]

| Constituent | Symbol |
|---|---|
| AT&T Inc | true |
| Ford Motor Co | false |

The reason for this is that the respective ticker symbols are “T” and “F”, which are interpreted as boolean values. Once we have corrected this, we convert the array of ticker symbols into an Array of type ASCIIString.

indTrue = find(constituents[2] .== true)
indFalse = find(constituents[2] .== false)
constituents[indTrue, 2] = "T"
constituents[indFalse, 2] = "F"
tickerSymb = ASCIIString[constituents[:Symbol]...]
tickerSymb[1:5]

| MMM |

| ABT |

| ABBV |

| ACN |

| ACE |
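In current Julia (1.x), the same repair can be written as a single comprehension over the raw column; note that ASCIIString no longer exists there and String takes its place. The sketch below uses a hypothetical helper fixticker and a toy input vector. It is not the Taro or DataFrames API, just plain Julia that maps `true` to "T", `false` to "F", and turns every other entry into a string.

```julia
# Hypothetical helper: undo the spreadsheet reader's conversion of the
# tickers "T" (AT&T) and "F" (Ford) into booleans.
fixticker(x::Bool) = x ? "T" : "F"
fixticker(x) = string(x)

# Toy stand-in for the raw Symbol column, with mixed element types
raw = Any["MMM", true, "ABT", false]
tickers = String[fixticker(x) for x in raw]
```

Dispatching on `Bool` keeps the special case separate from the ordinary string path.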

Now that we have a list of all ticker symbols, we could in principle apply the same procedure as before: download each stock, extract the adjusted closing prices, and join all individual price series. However, with 500 stocks this procedure would take roughly 17 minutes (500 × 2 s = 1000 s) even if each individual stock took only 2 seconds. Hence, we strive for a much faster result using Julia’s parallel computing capabilities. This is already implemented in the function readYahooAdjClose.

Under the hood, readYahooAdjClose uses a map-reduce structure. In the map step, for any given ticker symbol we download the data, extract the adjusted closing prices and rename the column to the ticker symbol. In the reduce step, we need to specify an operation that combines the individual results of the map step; in our case, this is the function joinSortedIdx_outer.
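The map-reduce pattern can be sketched in current Julia (1.5 or later, for mergewith) with the Distributed standard library. This is not the code of readYahooAdjClose: fetch_adjclose is a hypothetical stand-in that returns toy prices instead of downloading anything, and each partial result is stored as a Dict from date to a Dict of ticker => price. With that layout, mergewith(merge, a, b) acts as an outer join on dates: dates from either side are kept, and rows for shared dates are merged.

```julia
using Distributed
using Dates

# Hypothetical map step (stands in for: download, extract adjusted close,
# name the column after the ticker). Returns toy data; nothing is fetched.
function fetch_adjclose(ticker::String)
    toy = Dict(
        "AAA" => [(Date(2014, 7, 3), 10.0), (Date(2014, 7, 7), 11.0)],
        "BBB" => [(Date(2014, 7, 4), 20.0), (Date(2014, 7, 7), 21.0)],
    )
    # one row per date; the inner Dict keeps the ticker as column name
    return Dict(d => Dict(ticker => p) for (d, p) in toy[ticker])
end

# Reduce step: outer join on dates. Dates present in only one argument are
# kept as they are; for shared dates the two rows are merged column-wise.
outer_join(a, b) = mergewith(merge, a, b)

tickers = ["AAA", "BBB"]
# pmap distributes the map step over worker processes; without addprocs it
# simply runs on the master process
partials = pmap(fetch_adjclose, tickers)
combined = reduce(outer_join, partials)
```

A ticker that is absent from a date's inner Dict plays the role of NA. If you add workers with addprocs, fetch_adjclose has to be defined with @everywhere, just like the packages in the @everywhere lines that follow.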

Let’s now set the stage for parallel computation: we add three additional processes and load the required packages on each of them.

addprocs(3)
@everywhere using Dates
@everywhere using DataFrames
@everywhere using TimeData
@everywhere using EconDatasets

3-element Array{Any,1}:
 2
 3
 4

To run the parallelized code, simply call function readYahooAdjClose:

dates = Date(1960,1,1):Date(2014,7,20)

## measure time
t0 = time()
@time vals = readYahooAdjClose(dates, tickerSymb, :d)
t1 = time()
elapsedTime = t1-t0
mins, secs = divrem(elapsedTime, 60)

Downloading all 500 stocks took only:

println("elapsed time: ", int(mins), " minutes, ", ceil(secs), " seconds")

elapsed time: 3 minutes, 45.0 seconds

Now we convert the data of type Timedata to type Timenum and store the result in the EconDatasets data directory:

valsTn = convert(Timenum, vals)

pathToStore = joinpath(Pkg.dir("EconDatasets"), "data", "SP500.csv")

writeTimedata(pathToStore, valsTn)

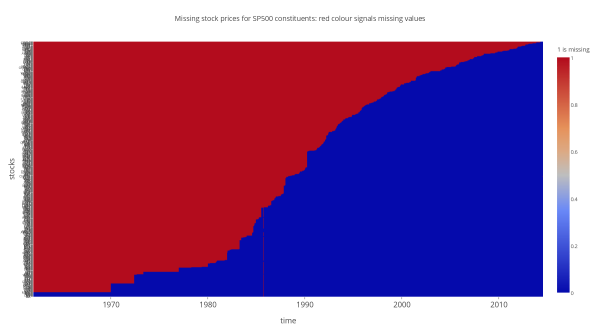

3 Visualize missing values

From previous experience I already know that the saying “you get what you pay for” also holds for the free Yahoo!Finance database: the data comes with a lot of missing values. To get a feeling for the data quality, we want to visualize all missing values. For this purpose, we first sort all assets by their number of missing values.

(nObs, nStocks) = size(valsTn)
NAorNot = Array(Int, nObs, nStocks)

for ii=1:nStocks
    ## 1 where the price is NA, 0 otherwise
    NAorNot[:, ii] = isna(valsTn.vals[ii])*1
end

nNAs = sum(NAorNot, 1)
p = sortperm(nNAs[:])
tickSorted = tickerSymb[p]
NAorNotSorted = NAorNot[:, p]
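For readers on current Julia (1.x), where NA and isna have been replaced by `missing` and `ismissing`, the same counting and sorting step could look as follows. The 3×3 matrix is a toy stand-in for the price data, not the downloaded SP500 values, and the ticker names are hypothetical.

```julia
# Toy price matrix: rows are dates, columns are stocks; `missing` marks gaps
prices = [1.0     missing 3.0;
          missing missing 6.0;
          7.0     8.0     9.0]
tickers = ["AAA", "BBB", "CCC"]

naMask = ismissing.(prices)           # BitMatrix, true where a price is missing
nNAs = vec(sum(naMask; dims = 1))     # number of missing values per stock
p = sortperm(nNAs)                    # stocks ordered from fewest to most gaps
tickSorted = tickers[p]
naMaskSorted = naMask[:, p]           # columns reordered for the heatmap
```

Broadcasting `ismissing` over the whole matrix replaces the explicit column loop.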

For the visualization, we rely on plot.ly, since it produces an interactive graphic in which individual stocks can be identified by simple mouse clicks. If you want to replicate the figure, you will need to sign in with your own free plot.ly account.

Pkg.clone("https://github.com/plotly/Plotly.jl")
using Plotly

## sign in with your account
## Plotly.signin("username", "authentication")

nToPlot = nStocks
datsStrings = ASCIIString[string(dat, " 00:00:00") for dat in valsTn.idx]

data = [
    [
        "z" => NAorNotSorted[:, 1:nToPlot],
        "y" => tickSorted[1:nToPlot],
        "x" => datsStrings,
        "type" => "heatmap"
    ]
]

response = Plotly.plot([data], ["filename" => "Published graphics/SP500 missing values", "fileopt" => "overwrite"])
plot_url = response["url"]

Although the resulting graphic could easily be embedded directly into this html file, you will need to follow this link to view it, since the plot.ly graphic is a large data file and hence takes quite some time to load. Nevertheless, I also exported a .png version of the graphic, which you can find below. In it, red dots represent missing values.

4 Get logarithmic returns

Now we have our closing price data at hand. In financial econometrics, however, we usually analyze return data, since prices are almost always non-stationary. Hence, we now want to derive returns from our price series.

For this step I rely on the Econometrics package, which is also not yet registered:

Pkg.clone("https://github.com/JuliaFinMetriX/Econometrics.jl.git")

using Econometrics

This gives us access to the function price2ret, which implements a slightly more sophisticated approach to return calculation. This is best described through an example, which we can set up using the function testcase from the TimeData package:

logPrices = testcase(Timenum, 4)

| idx | prices1 | prices2 |
|---|---|---|
| 2010-01-01 | 100 | 110 |
| 2010-01-02 | 120 | 120 |
| 2010-01-03 | 140 | NA |
| 2010-01-04 | 170 | 130 |
| 2010-01-05 | 200 | 150 |

As you can see, the example logarithmic prices have a single missing observation on January 3rd. Straightforward application of the logarithmic return formula therefore results in two missing values in the return series:

logRetSimple = logPrices[2:end, :] .- logPrices[1:(end-1),:]

| idx | prices1 | prices2 |
|---|---|---|
| 2010-01-02 | 20 | 10 |
| 2010-01-03 | 20 | NA |
| 2010-01-04 | 30 | NA |
| 2010-01-05 | 30 | 20 |

In contrast, the function price2ret assumes that single missing values in the middle of a price series are truly non-existent: there is no observation because the stock exchange was closed and there simply was no trading. This can easily happen, for example, if you have stocks from multiple countries with different holidays. Note that this kind of missingness differs from the case where the stock exchange was open and trading did occur, but we were not able to observe the resulting price (for a more elaborate discussion of this point, take a look at my blog post about missing stock price data). Using the function price2ret, you end up with a return series that has only one NA for this example:

## log = true: returns are differences of the (already logarithmic) prices
logRetEconometrics = price2ret(logPrices; log = true)

| idx | prices1 | prices2 |
|---|---|---|
| 2010-01-02 | 20 | 10 |
| 2010-01-03 | 20 | NA |
| 2010-01-04 | 30 | 10 |
| 2010-01-05 | 30 | 20 |
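The rule illustrated by these two tables can be reproduced with a small plain-Julia sketch (Julia 1.x, with `missing` instead of NA). This is an assumption-labeled re-creation of the behavior shown above, not the Econometrics package's code, and the function name diff_skip_missing is hypothetical: a missing day gets a missing return, and the next return is computed against the last observed log price.

```julia
# Hypothetical re-creation of the behaviour shown above: differences of a
# log-price series where a missing value is treated as a non-trading day.
function diff_skip_missing(x::AbstractVector)
    out = Vector{Union{Missing,Float64}}(missing, length(x) - 1)
    prev = x[1]                      # last observed value so far
    for t in 2:length(x)
        if ismissing(x[t])
            out[t-1] = missing       # no trading, hence no return
        else
            # return relative to the last observed value (missing if none yet)
            out[t-1] = ismissing(prev) ? missing : x[t] - prev
            prev = x[t]
        end
    end
    return out
end

prices2 = [110, 120, missing, 130, 150]          # column prices2 from the example
naive = prices2[2:end] .- prices2[1:end-1]       # straightforward differences
skipped = diff_skip_missing(prices2)
```

`naive` reproduces the two NAs of the simple calculation, while `skipped` matches the single NA of price2ret. This sketch bridges gaps of any length; how price2ret handles several consecutive NAs is not shown by the example above.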

So the final step in our logarithmic return calculation is to apply price2ret to the logarithmic prices, request difference-based return calculation through log = true, and multiply the results by 100 to get percentage returns.

percentLogRet = price2ret(log(valsTn); log = true).*100

Alternatively, you could get discrete returns with:

percentDiscRet = price2ret(valsTn; log = false).*100
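As a reminder of what the two variants compute, here is a toy example in plain Julia 1.x on a complete price vector, so no gap handling is involved and price2ret is not used. Percentage log returns are 100 times the differences of log prices, percentage discrete returns are 100 times the relative price changes, and the two are linked by R = 100 (exp(r/100) - 1). The price values are made up for illustration.

```julia
prices = [100.0, 110.0, 99.0]                          # toy price series

# percentage log returns: 100 * (log P_t - log P_{t-1})
logRet = 100 .* diff(log.(prices))

# percentage discrete returns: 100 * (P_t / P_{t-1} - 1)
discRet = 100 .* (prices[2:end] ./ prices[1:end-1] .- 1)

# converting log returns back to discrete returns
backToDisc = 100 .* (exp.(logRet ./ 100) .- 1)
```

Log returns are slightly smaller than the corresponding discrete returns for gains, which is why the two should not be mixed within one analysis.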

